I’ve spent the past few months assembling and creating a Kubernetes (K8s) cluster running on several Raspberry Pi 4s. It is backed by a Synology NAS for networked storage. Along the way, I’ve learned a bunch of stuff about managing infrastructure. Most importantly, I’ve learned the value of a managed K8s instance from a cloud provider, as there is a bunch of operational overhead to installing all this stuff that automation helps with, but doesn’t ultimately resolve.

What?

By far the most common question I receive from non-Software Engineers when describing this project is “what does it… do?” I don’t ever have a satisfactory answer to that question. My answer usually goes something like this.

Trying to Explain What Kubernetes Is

As a Site Reliability Engineer at an organization that is undergoing a trendy “digital transformation”, I have a lot of experience interfacing with technical and non-technical people about what K8s is. It doesn’t always go well. Because K8s is the current “in” technology in the SaaS world, there are a lot of misconceptions and opinions about it. The operational definition that the official documentation provides is: “Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation.” This is a pretty good definition for a Software Engineer. When explaining this to my mother, however, what a “containerized workload” is isn’t exactly obvious.

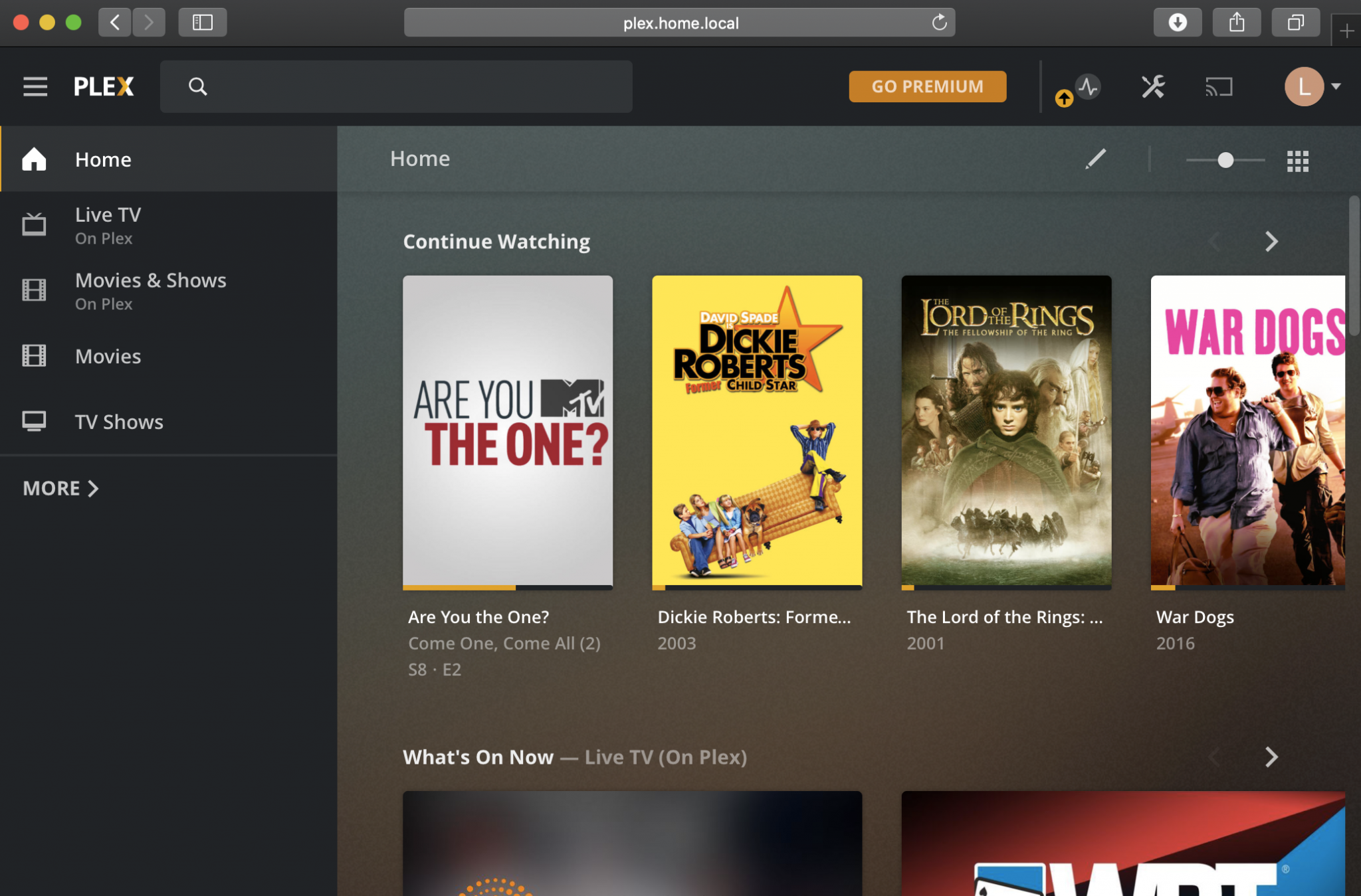

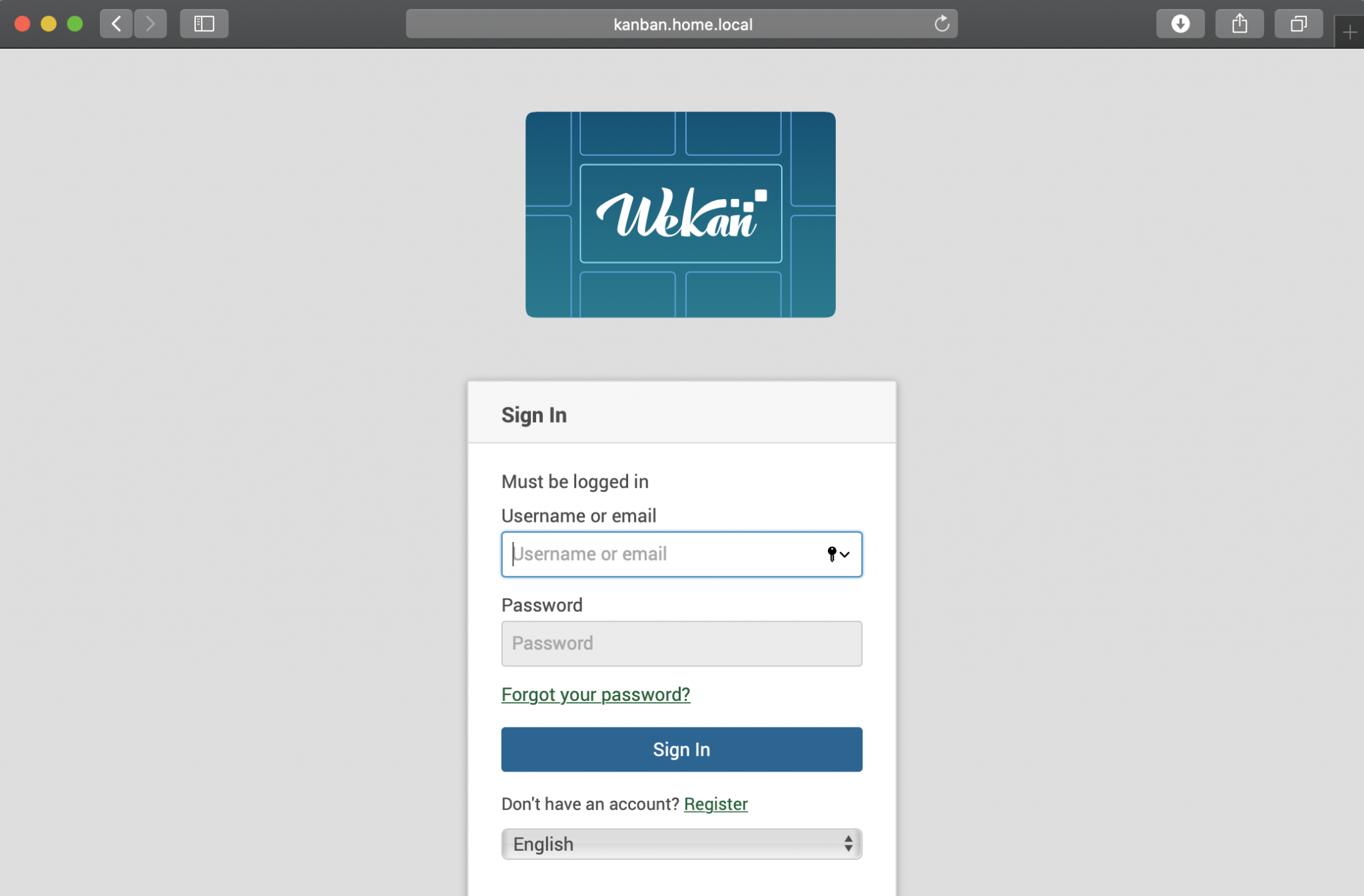

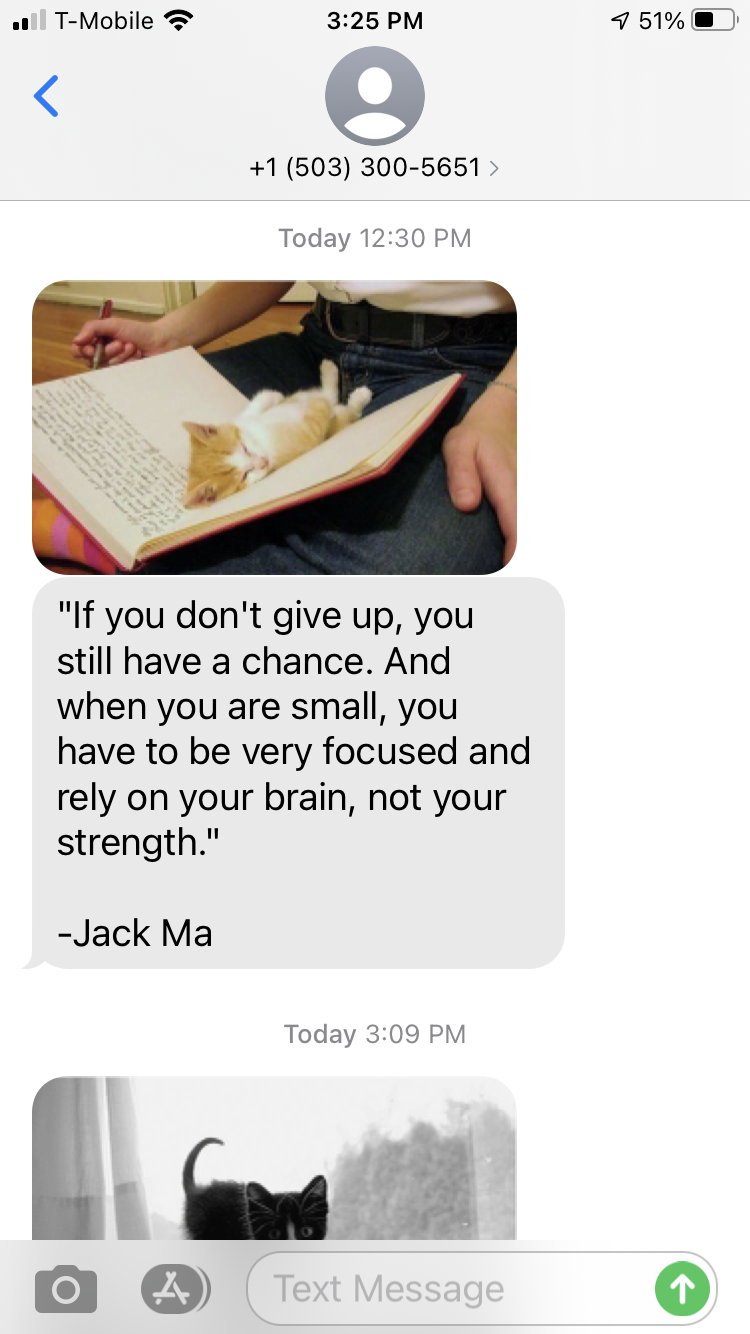

By itself, K8s doesn’t do anything tangible. It is simply a platform on top of which you can run arbitrary code. So for the purposes of explaining to my girlfriend why I spent a couple hundred dollars and many hours of my time to create a working cluster in my apartment, it’s not really helpful to hide behind the official documentation. Some of the value that this brings is demonstrated by the simple applications that I’ve deployed to it. Without significant development effort, I implemented an interactive kanban board using Wekan and a Plex Media Server on my home network. In addition, I created a small service that sends text messages using Twilio and the quote of the day API.

Some of the services running on my K8s Cluster

After showing some of those, it is usually not too much of a leap for most to conceptualize this cluster as a sort of “thing-doer”. It is something that runs various services within my house. As long as you avoid the nuance of what gives Kubernetes so much business value, it’s really not tough to understand. Of course, that obscures all the complexity.

But why?

Trying to Explain Why I Did This in my Apartment

After listening to a long spiel about the brave new world of cloud-native technology that k8s represents, most non-engineers have largely tuned out. If I start saying things like “availability”, “containers”, “resiliency”, their eyes glaze over. Fortunately, I have a few engineers in my social circle, and am lucky enough to have at least one who is genuinely curious as to why you might run k8s at home. To be honest, there’s no real reason. I have a few canned answers that are all partially true. They go something like:

It helps professionally

This one is the most honest. In my current job I am tasked with management and administration of the various deployment pipelines and infrastructure powering several of my company’s software services. As at many companies, there is application code, and we use Docker to build container images from it, K8s to orchestrate them, Infrastructure as Code (IaC) with Terraform to ensure repeatability, and Continuous Integration and Delivery (CI/CD) to automate the release process. Unfortunately, it has been observed that not all developers will keep reading after “there is application code”. Despite the ongoing war on the traditional software development lifecycle, most of the operational responsibility still falls upon the SRE/DevOps-minded folks, thus “freeing up” more cycles for developers to focus on “value delivery”. What does this mean for my home K8s cluster? I wanted a way to continually sharpen the DevOps sword and gain experience with K8s without running up an expensive cloud bill. Experimenting and messing around with my company-owned AKS cluster is… discouraged. Believe me. I tried.

By focusing on creating a repeatable and automated deployment of a somewhat novel infrastructure (bare metal Raspberry Pis), I was able to use some tools that I knew to solve a novel problem: not having a k8s cluster in my apartment. Creating this project forced me to brush up on my Linux sysadmin knowledge, my networking topologies, storage concepts, k8s administration, and kernel woes (why is it so hard to find Docker images for arm64 architectures?).

It gives me a platform to run my own code

I have a bunch of half-finished programming projects in my GitHub. I haven’t deleted them out of some masochistic belief that I will one day return and finish them. While I’m almost certain that I never will, at least now I have a platform to run them on.

As someone who is constantly overwhelmed by the number of new technologies and tools that I am expected to know, I’m often trying out new things, getting frustrated, and putting them down forever.

With a self-contained cluster that I have full control over, I don’t have to involve any convoluted processes or actual expenses to immediately see the results of my development in a “live” environment. While I have to develop conscientiously (only develop that which can run on ARM architectures), the miracle of Docker and k8s allows me to develop arbitrary code, package it up into a Docker image, and ship it to my own cluster using nothing but my laptop and the command line. Involving GitHub Actions allows me to build my Docker images automatically. See here for an example. I can replicate a really naive CI/CD system! And now I have my own sandbox to do it in!
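To make the “package it up and ship it” step a little more concrete, here is a minimal sketch of what the shipping half could look like. It is not taken from my actual setup: the app name `quote-texter` and the image reference `ghcr.io/example/quote-texter:latest` are made-up placeholders, and the helper `build_deployment` is purely illustrative. It builds a bare-bones Kubernetes Deployment manifest as JSON and pins the pods to arm64 nodes with the standard `kubernetes.io/arch` node label.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 1) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a plain dict.

    `name` and `image` are whatever you choose; nothing here is specific
    to a particular cluster.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    # Only schedule onto arm64 nodes, e.g. Raspberry Pi 4s
                    # running a 64-bit OS.
                    "nodeSelector": {"kubernetes.io/arch": "arm64"},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image reference, for illustration only.
    manifest = build_deployment(
        "quote-texter", "ghcr.io/example/quote-texter:latest"
    )
    print(json.dumps(manifest, indent=2))
```

Since `kubectl` accepts JSON manifests as well as YAML, the output of a script like this could be piped straight into `kubectl apply -f -`, assuming the image has already been built for arm64 and pushed somewhere the cluster can pull from.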

It’s Fun!

This is the only real reason. I like experimenting. Kubernetes is a cool technology and the fact that I can mess around with it in a no-pressure environment for a relatively modest startup cost is incredible. If I were truly serious about creating a service for the purpose of making a business out of it, I sure as shit wouldn’t run it on a bookshelf in my apartment using $35 hardware. I do it for the hell of it! One day, I’ll probably find something worth running on it but until then, I’ll keep tinkering with knobs and switches until I get just that much more performance out of whatever the heck I’m running on it that week.

Conclusion

I still don’t have a business-friendly answer to “why”, but at least this post will kind of illuminate “what”. Long story short: I built a thing-doer (that doesn’t do anything useful yet) for fairly cheap (when compared to a business’ cost) to learn things (that I do at work every day) and for fun (and to learn to cope with frustration).

For technical details, see this follow-up post about the actual implementation.