There are a lot of important books that are recommended for software developers. A data-driven answer about which programming books get recommended online revealed a lot of the usual suspects. The top five are commonplace books that every developer should have on their shelves:

- The Pragmatic Programmer by David Thomas and Andrew Hunt

- Clean Code by Robert C. Martin

- Code Complete by Steve McConnell

- Refactoring by Martin Fowler

- Head First Design Patterns by Eric Freeman, Bert Bates, Kathy Sierra, and Elisabeth Robson

One of the books that didn’t make that list is The Design of Everyday Things by Don Norman. Although the book is somewhat dated in publication (originally appearing in 1988, updated in 2002 and 2013), its design insights are not. It is a refreshingly simple and elegant look into the psychology behind why design principles are critical in the creation of anything that will be used by humans. Reading it recently got me thinking about where usability fits into the work that SREs, Platform Engineers, and other “internal tooling” type jobs are tasked with.

Usability in Software

Usability in software is widely studied. UX/UI has blossomed into a profession tasked with good, intentional software design. The US Government even has a website fully devoted to design principles. Of course, in true US government fashion, one of the featured links from the home page is broken. In addition, it has several web elements with no discernable purpose or usable features.

Dunking on the GSA’s Usability office aside, the importance of usability in customer-facing software is well understood. The general population that uses most software has no concern for the inner workings of said software. In the attention economy, sleek software is valued above powerful software. The ultimate prize is fully featured software that is easily usable. Developing products for other developers is no exception. While many fall back on the platitude “Read the Docs”, the truth is that, in general, even developers will prefer an easily usable product over a harsh product, even if the harsh product has more capabilities. Obviously, this has some exceptions and limitations, but in my experience the general concept has held true.

Usability When your Users are Developers

I work on an application delivery platform for software engineers internal to my company. As an organization going through a “digital transformation”, we are attempting to embrace the Scaled Agile Framework for Enterprises. I have my own doubts and reservations about the viability of SAFe due to its attempt to unite two antithetical methodologies, but that’s a topic for another post. In short, there are a few critical background points for understanding the transformation.

- The company is attempting to go from a traditionally siloed development and operations model to a united DevOps workflow

- Outside experts have been hired to “jump start” the digital transformation by building an internal delivery platform using modern tooling

- Many developers are now asked to use this platform as well as learn the underlying technology

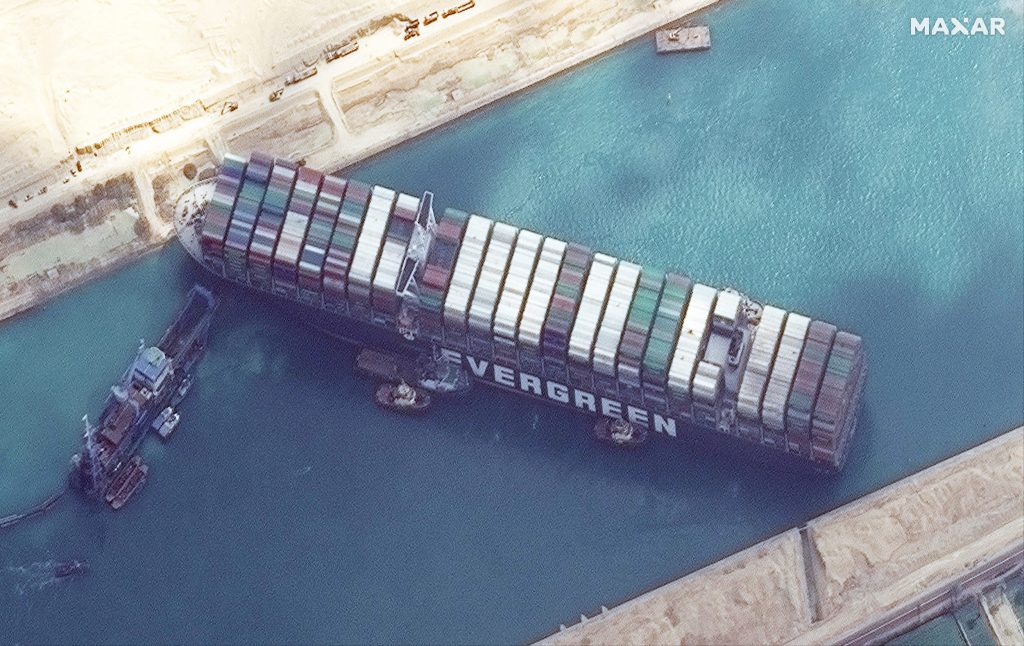

While the spirit of the transformation is a genuinely good impulse, radically transforming a large and established enterprise organization comes with a huge number of challenges. Shifting the technology that folks are expected to use can be done by simply altering the requirements of the job, but changing the culture, processes, and expectations by which software is created is a much bigger obstacle. The saying around the office is that “big ships turn slowly”.

Background on Organizational Transformation in Software

Up until the digital transformation, developers at my company were tasked with traditional responsibilities, and the development workflow looked like the one that is standard at many large organizations. Although the following outline is not identical to the one practiced by my company, it is not far off, and it is likely similar to the process at many companies worldwide. Many organizations deliver value to their customers using a variation of the waterfall method:

- The business spends time gathering requirements and performing research into the market space. It determines that a product needs to be created.

- In collaboration with technical leads, the business develops a product vision.

- Technical leads create implementation plans and a system design.

- Developers write code in their local environment to fulfill the business requirements.

- Developers ask the “Build and Release” Engineers to deploy the code to a development environment. A BR Engineer would make sure that the correct coding patterns were followed such that deployment was possible (Is config management specified according to the XL Deploy requirements? Does the Jenkins pipeline have the correct Maven commands to build the artifact? Are the runtime requirements clear?).

- After deployment to a development environment, developers can collaborate on making features and bugfixes until they’re satisfied.

- QA people are looped in to test that basic requirements are fulfilled and there aren’t any showstopping bugs. If there are, return to step 4.

- BR Engineers are again pulled in to deploy to a more stable environment with change controls enabled. That stable environment is where load testing, integration testing, security scans, static code analysis, and systems testing occur. Most of the testing will be automated but a large amount will be manual. If tests fail, return to step 4.

- Code is packaged up into larger releases and moved to the UAT environment. Here, business folks can get a look at the features, give feedback, and possibly send the code back for revisions. If everything looks good, deployment to production happens during a maintenance window. Otherwise, return to step 4.

- Operations teams in an offshore NOC are largely responsible for monitoring the operation of the product, escalating issues to the development team if a problem is determined to be caused by faulty code rather than adverse operating conditions.

This approach has demonstrated historical success. It is also falling out of favor for many reasons. The one I’d like to focus on is the number of handoffs required between different parties, along with the hyper-specialized responsibilities a project needs in order to succeed. Each step where code “goes” somewhere involves a delicate handoff to another team or role and creates an opportunity to introduce failures. Worse than that, it creates bottlenecks in the ability to deliver something from start to finish. Even worse still, it creates siloed responsibilities that divide teams. Whenever there’s an incident in production, it is easy for different tribes to point fingers. “This code is crappy!” “The deployment failed because operations don’t understand my software!” “QA didn’t test this well enough!” These are phrases one might hear when discussing the outcome of an incident. Cloud providers and savvy tooling folks realized this many years ago and began pioneering “DevOps”, which reduced the barriers between the developers and operators of software products. Software developers are now empowered to own their code from authorship to operation. That means they have a lot more on their plate.

The Expanding Scope of Developers’ Responsibilities

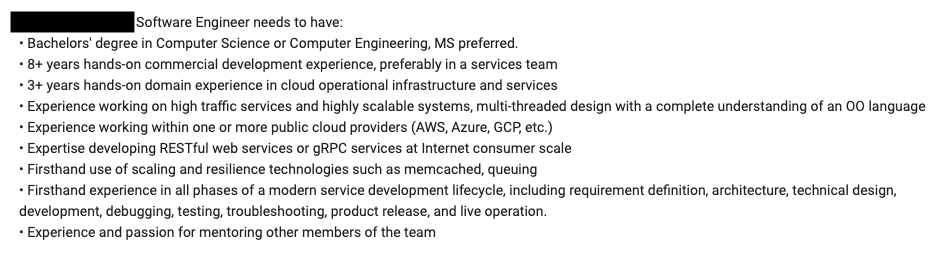

In a hiring manager’s fantasy, software developers are rockstars who are capable of owning the entirety of the software development lifecycle. They’re experts in not only crafting elegant code, but delivering it to customers automagically at lightning speed. Any errors are resolved with automation for a seamless user experience. There are no operations teams, testing is accomplished by Software Development Engineers in Test (SDETs) whose testing is fully automated, and the delivery platform takes care of the security, compliance, auditing, and necessary change controls. Why hire multiple teams when you can pay a single salary to do everything?

Developing software is no longer just writing code. For a lot of my peers, writing code is something that they spend less than half their time on. The rest of it is figuring out how to deploy the code, learning the tools that are used for the deployment, debugging various issues related to external dependencies, and trying to figure out why the heck their build pipeline turned red without a code change. While a lot of these issues used to be the responsibility of entirely different teams (networking team, build and release team, infrastructure team, etc.), individual developers are now on the hook. It is fair to say that the cognitive load is simply more than is reasonable to handle.

DevOps as a Catalyst for Delivery Platforms

In the past ten years, businesses have caught on that DevOps is a good idea and have been pushing for it as a paradigm shift. Unfortunately, the training, mentality, cultural shifts, and necessary management support don’t always come overnight. When they don’t, you end up with huge projects to “transform” the culture and mindset of a company. With developers now tasked with “products” that they must build, deploy, and operate themselves rather than “projects” that they can create and then hand off, their responsibilities have expanded as well. As highly skilled as software engineers are, there’s only so much knowledge and time any single individual can have. This is why automated tooling becomes very important. How can one change the build, release, testing, change control, production support, and other processes encapsulated in the above model without the assistance of tools? This brings me all the way back to the internal platform. Humanitec has a great writeup on the “how” and “why” of an internal platform. Coincidentally, they’re also a vendor of a proprietary Internal Developer Platform (IDP). Go figure.

Humanitec Internal Developer Platform connects your setup to automate Ops overhead and set clear golden paths for developers. It enables true self-service by letting developers running their apps and services autonomously.

Humanitec’s sales pitch

The platform envisions that Operation teams create “golden paths”. These are self-service packages for developers with an automated and validated infrastructural solution for whatever an application may need. Does your product require a NoSQL database? The platform can spin up a CosmosDB instance on Azure with the click of a button, fully optimized for your company’s preferred configuration. Infrastructure provisioned by the platform integrates with monitoring and alerting automatically. Developers can focus on writing code and tuning the infrastructural defaults to their business use case. When things go wrong, developers are on point to resolve them. Everything becomes about speed, efficiency, and decisions driven by monitoring and observing the behavior of the system. There’s no longer a long chain of handoffs and potential breakdowns in the route to production.

The point of all this background on organizational transformation and DevOps is that when creating a platform tool, your customers are your developers. In my experience, asking developers to expand the scope of their responsibility to include learning how to operate a platform built on technology they aren’t familiar with has come with a lot of challenges. While I hesitate to say that the challenges could be overcome with a more usable platform, the cognitive load upon developers would be decreased. For that reason alone, prioritizing usability is worth focusing on.

The Importance of Platform Usability

The advantages of a self-service platform seem undeniable. If you can truly get developers to operate at lightning speed while also creating reusable and reliable infrastructure for them, what’s the downside? While there may be no downsides in theory, in practice it is difficult to develop something that can be used as a self-service developer platform. It requires dedicated developers familiar with infrastructure and automation to create solutions. It also requires collaboration with the “customers” of the platform in order to make sure that any solution developed will actually be useful. Furthermore, it requires input from adjacent teams to make sure the infrastructural solution fulfills security, auditing, and any other requirements that might block production deployments. Even if all of those boxes are checked off, there is still a major obstacle in front of the platform’s adoption: usability.

In order for the platform to become something that developers actually want to use, it cannot be overly onerous to begin using it. Anyone who has experience attempting to get a new framework adopted by a development team has a few compelling selling points that can be leveraged.

The first is the most obvious: the stick. If you simply require that developers use your platform, they will. However, if a required technology is a pain in the ass to use, developers won’t last long and neither will your business. Finding good software engineers is challenging. Keeping developers happy is paramount, and forcing them to use a crappy product will result in stress, resentment, burnout, and turnover. Simply changing the requirements is not usually a good way to increase platform adoption.

The second is more reasonable, but ultimately about as ineffective: the carrot. If you simply ask developers to use a brand new product, it likely won’t go well. Unless you can demonstrate absolutely overwhelming evidence that the product will do one or more of the following:

- Save them time and effort

- Increase their salaries

- Solve challenging problems

there’s not a high likelihood that it will be adopted. Engineers respond to incentives. When asked to use a new product without a proven track record of success, you’re essentially asking Engineers to become unpaid beta testers. Worse than that, if you can’t guarantee platform stability, you’re asking Engineers to beta test a product that actively makes their lives harder. Even if you can guarantee stability, if your product is unintuitive you are still asking Engineers to increase cognitive load. Engineers are smart and hardworking people, but they do not respond well to increasing their mental load in order to accomplish their goals. When coupled with the expanding scope of the software engineering profession, increasing both the responsibilities of the practitioner and learning curve of their tools is a recipe for disaster.

The third approach is the most reasonable. I call it the sticky carrot. Incentivize adoption initially and require it later. This approach is beneficial to both the platform and the developers that are using it. The generalized approach is something like this:

- Identify a competent member of engineering leadership with a desire to adopt DevOps philosophies

- Work to create a small team of engineers with various skill levels and open minds

- Work on either a greenfield product or a legacy product with a small codebase

- With the small group, iterate on feedback between the platform and the product delivered with it

- In parallel, begin adopting DevOps processes on other teams

- When the platform is stable enough for GA, identify successes with the pilot team

- Set a timeline for required adoption from everyone

- Begin porting over other teams, led by the initially adopted team

Of course, this approach is just a framework and is not intended to be prescriptive. The importance of the usability of the platform cannot be overstated. The feedback step (iterating with the small group on both the platform and the product delivered with it) requires actual feedback and collaboration between the two teams responsible. Working diligently to increase the usability of the platform will pay dividends down the road when asking for widespread adoption. A completely mature developer platform can have many features, but it is important to first deliver a usable product that fulfills the minimum requirement that makes a development team more productive.

An Illustrative Example

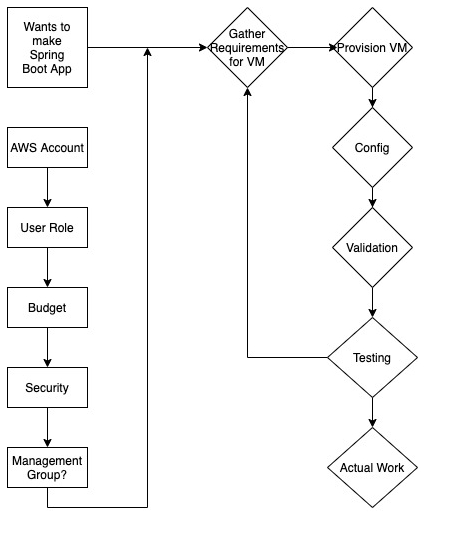

At an absolute baseline, think of a radically simple delivery pipeline for an AWS VM for developers. The ask is: a development team needs a publicly available VM to deploy a Java Spring Boot application to serve an API. The API isn’t even attached to a database. Without a platform, that process would involve the manual steps of a developer going into the AWS console, provisioning an EC2 instance according to their best guess of the requirements, SSH’ing into the instance, downloading required dependent packages, and testing connectivity to the public IP. In addition, that developer’s user account and ability to provision resources would have to be set up as a prerequisite.

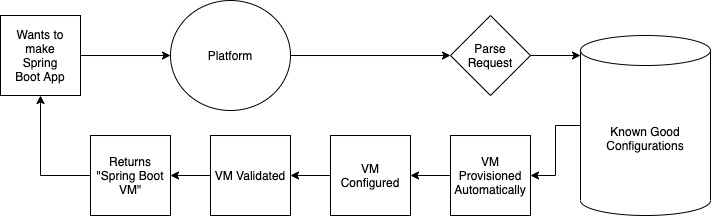

This workflow is error-prone, tough to repeat, and the effort scales linearly with the number of VMs necessary. To make this easier, a platform hooked up to a few tools that many practitioners will be familiar with can greatly improve the efficiency of the process. To start, the platform team could use an IaC tool like Terraform to provision an EC2 instance, an Ansible playbook to install Spring Boot dependencies, and a quick availability test using curl to validate public IP connectivity. Developers could then run this automation on their laptops to increase speed and productivity. At this point, two parallel tracks of work can begin: developers can tweak the Terraform modules and Ansible playbooks to make them more useful, and the platform team can begin working on an automated interface that the development team can use. It can be as simple as an API with a request/response interface where developers can request “VM for Spring Boot” and the response will be an identifier for the EC2 instance provisioned with this automated process.
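To make the request/response idea a little more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the names `ProvisionRequest`, `ProvisionResponse`, `Pipeline`, and `handle_request` are hypothetical, and the three callables are only stand-ins for whatever would actually invoke Terraform, Ansible, and curl.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class ProvisionRequest:
    template: str       # hypothetical template key, e.g. "vm-for-spring-boot"
    requested_by: str   # who is asking; useful for auditing


@dataclass(frozen=True)
class ProvisionResponse:
    instance_id: str
    public_ip: str


@dataclass(frozen=True)
class Pipeline:
    # Stand-in for the Terraform step; returns (instance_id, public_ip).
    provision_vm: Callable[[], Tuple[str, str]]
    # Stand-in for the Ansible playbook that installs dependencies.
    configure_vm: Callable[[str], None]
    # Stand-in for the curl availability check; True means reachable.
    check_vm: Callable[[str], bool]


def handle_request(
    request: ProvisionRequest, pipelines: Dict[str, Pipeline]
) -> ProvisionResponse:
    """Run provision -> configure -> validate for the requested template."""
    pipeline = pipelines.get(request.template)
    if pipeline is None:
        raise ValueError(f"unknown template: {request.template!r}")

    instance_id, public_ip = pipeline.provision_vm()
    pipeline.configure_vm(public_ip)
    if not pipeline.check_vm(public_ip):
        # Fail loudly rather than hand back a VM that is not reachable.
        raise RuntimeError(f"{instance_id} failed its connectivity check")
    return ProvisionResponse(instance_id=instance_id, public_ip=public_ip)


if __name__ == "__main__":
    # Fake steps so the sketch runs without touching any cloud account.
    # The instance id and IP below are placeholders, not real resources.
    fake = Pipeline(
        provision_vm=lambda: ("i-placeholder", "203.0.113.10"),
        configure_vm=lambda ip: None,
        check_vm=lambda ip: True,
    )
    response = handle_request(
        ProvisionRequest(template="vm-for-spring-boot", requested_by="dev-a"),
        {"vm-for-spring-boot": fake},
    )
    print(response)
```

Keeping the three steps behind plain callables means the same handler could sit behind a CLI or an HTTP endpoint later, and it can be tested without provisioning anything.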

A Toy Implementation

The reliability and usability of a platform are paramount. When building the Spring Boot VM from the previous example, if the platform only delivers a working and correctly configured VM 60% of the time, it is not a very usable platform. Likewise, if the “platform” is sending an email to the “DevOps Guy” and waiting an indeterminate amount of time before the VM is available, it is also not very usable. However, what if your platform allowed for a fully authenticated way to provision the VM from any developer’s command line? If a developer is logged in through the AWS CLI, or has some other way of providing credentials, the platform could do all of this with a simple interface:

$> ./platform --vm

Please select the VM you'd like to provision: 1

1. Java Spring Boot (dev)

2. Java Spring Boot (prod-ready)

3. golang Binary (dev)

4. golang Binary (prod-ready)

5. more options

Provisioning VM: --[ Spring Boot (dev) ]--

Validating AWS connectivity...

Validating AWS credentials...

Obtaining VM Config...

Provisioning VM...

Configuring VM...

Validating VM connectivity...

Entering VM into Live VM Database....

Your new VM has id <resource-id> and is publicly available at 1.2.3.4. Your public SSH key has been added to authorized_keys file.

Granted, an actual GUI would be much easier, but this is without a doubt quicker than a developer manually provisioning and configuring through the AWS console. In addition, the platform could automatically detect the Role or User that is invoking it and use that information to limit the list of VMs that would be reasonable for that developer to provision. A “Live VM Database” could keep track of the actively provisioned VMs to automatically clean them up when no longer in use. There are many different ways to optimize the platform to give developers the best possible experience when using it.
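As a rough sketch of those two ideas, the Python below filters the menu by the caller's role and flags VMs that have sat idle for too long. The role names, the role-to-template mapping, the `LiveVM` record, and the seven-day idle threshold are all assumptions made up for illustration; a real platform would pull the caller's identity from its cloud credentials and keep the records in an actual datastore.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List

# Hypothetical mapping from a caller's role to the VM templates it may request.
ROLE_CATALOG: Dict[str, List[str]] = {
    "developer": [
        "Java Spring Boot (dev)",
        "golang Binary (dev)",
    ],
    "release-engineer": [
        "Java Spring Boot (dev)",
        "Java Spring Boot (prod-ready)",
        "golang Binary (dev)",
        "golang Binary (prod-ready)",
    ],
}


def templates_for(role: str) -> List[str]:
    """Return the menu entries a role may provision; unknown roles get none."""
    return list(ROLE_CATALOG.get(role, []))


@dataclass(frozen=True)
class LiveVM:
    """One row of a hypothetical 'Live VM Database'."""
    resource_id: str
    owner: str
    last_used: datetime


def stale_vms(
    vms: List[LiveVM],
    now: datetime,
    max_idle: timedelta = timedelta(days=7),  # assumed cleanup threshold
) -> List[LiveVM]:
    """Return the VMs that have been idle longer than max_idle."""
    return [vm for vm in vms if now - vm.last_used > max_idle]


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    inventory = [
        LiveVM("vm-a", "dev-a", now - timedelta(days=1)),
        LiveVM("vm-b", "dev-b", now - timedelta(days=30)),
    ]
    print(templates_for("developer"))
    print([vm.resource_id for vm in stale_vms(inventory, now)])
```

Returning an empty menu for an unknown role is a deliberate default here: a caller the platform cannot identify should see nothing rather than everything.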

Advanced Use Cases

Automatically provisioning the VM and giving it to the developer in a usable state might already be a huge win, but there will inevitably be tweaks that need to occur. For example, a developer who is knowledgeable about Spring Boot might realize that the provisioned VMs are memory constrained: they were sized for vanilla Spring Boot and do not have enough RAM to run under load. This makes using the platform frustrating, because the magic of automatically getting a VM will not impress developers who cannot use that VM for their everyday work. In response to this, more control can be exposed to the users in the form of “advanced” configuration options.

$> ./platform --vm \

--vm-type='java-spring-boot' \

--environment='dev' \

--advanced-options='RAM,CPU'

Please select the minimum RAM for your VM: 3

1. 4Gb

2. 8Gb

3. 16Gb

4. 32Gb

5. More

Please select the minimum vCPUs for your VM: 3

1. 2

2. 4

3. 8

4. More

Provisioning VM: --[ Spring Boot (dev) - customized ]--

Validating AWS connectivity...

Validating AWS credentials...

Obtaining VM Config...

- Minimum RAM: 16 Gb

- Minimum CPU: 8 vCPUs

... selecting EC2 Instance Type...

Instance Type Found: a1.2xlarge

Provisioning VM (a1.2xlarge)...

Configuring VM...

Validating VM connectivity...

Entering VM into Live VM Database....

Your new VM has id <resource-id> and is publicly available at 1.2.3.4. Your public SSH key has been added to authorized_keys file.

By creating sensible defaults while still exposing advanced configuration options to your developers, you’ve allowed for both use cases to filter through the platform. The actual machine instance type is not generally a problem; it is the configuration of the machine type that adds toil. By standardizing the format that developers use to provision their VMs, you can decrease the amount of time and the number of handoffs between developers and a usable product.
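The “selecting EC2 Instance Type” step in that transcript could be as simple as picking the smallest entry in a catalog that satisfies both minimums. The Python below is one possible sketch, not how any particular platform does it. The catalog is a small hand-written subset of the A1 family rather than something fetched from AWS, and ordering by vCPUs and then memory is only an assumed proxy for cost.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int


# Hand-written, illustrative subset of the EC2 A1 family. A real platform
# would maintain or fetch its own approved list.
CATALOG: List[InstanceType] = [
    InstanceType("a1.large", vcpus=2, memory_gib=4),
    InstanceType("a1.xlarge", vcpus=4, memory_gib=8),
    InstanceType("a1.2xlarge", vcpus=8, memory_gib=16),
    InstanceType("a1.4xlarge", vcpus=16, memory_gib=32),
]


def select_instance_type(
    min_memory_gib: int,
    min_vcpus: int,
    catalog: Optional[List[InstanceType]] = None,
) -> Optional[InstanceType]:
    """Return the smallest instance type meeting both minimums, or None."""
    if catalog is None:
        catalog = CATALOG
    candidates = [
        t for t in catalog
        if t.memory_gib >= min_memory_gib and t.vcpus >= min_vcpus
    ]
    # "Smallest" is approximated by fewest vCPUs, then least memory.
    return min(candidates, key=lambda t: (t.vcpus, t.memory_gib), default=None)


if __name__ == "__main__":
    # The request from the transcript above: at least 16 GiB RAM and 8 vCPUs.
    print(select_instance_type(min_memory_gib=16, min_vcpus=8))
```

Returning `None` when nothing fits leaves the decision to the caller, which could fall back to the “More” menu option or ask the platform team to extend the catalog.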

Wrap Up

This post ended up being a lot longer than I intended it to be. The real meat and potatoes of it is that usability for internal tools cannot be ignored. Even when the products that you build are strictly for internal consumption, focusing on usability will yield huge dividends for everyone involved. In an industry where the actual technological framework of daily workflows changes rapidly, giving your teammates tools that are intuitive and user-friendly is critical to long-term success. Software Developers must juggle many priorities and responsibilities. They’re expected to be able to translate ambiguous requirements to concrete data points, develop features to address them from scratch, and automatically deliver them to customers on a reliable cadence. What used to be an entire team of professionals has been collapsed into a single role!

With the pace of the industry only increasing, the job is destined to scale in difficulty. Don’t make it harder on them!